A heavily-bearded Muslim man smiles wickedly at the terrified woman next to him. A muscular man in a saffron dhoti walks in the rain carrying a young woman wearing a headscarf. A man in a skullcap wields a knife below a sign that reads “Abdul beef shop” while cows weep outside.

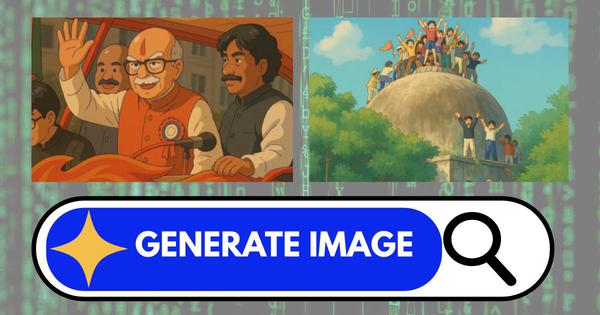

Images like these generated by artificial intelligence tools, featuring stereotypical Muslim men with exaggerated evil features and sexualised Muslim women, are being widely circulated on major social media platforms in India. They are being used to illustrate Hindutva conspiracy theories or bolster hateful narratives about Muslims in the country.

While the use of social media to spread hate about minorities in India has been widely documented, the proliferation of artificial intelligence tools that make it much easier to produce such images could boost the spread of Islamophobic messages and misinformation, warns a report by the Center for the Study of Organized Hate, an American thinktank, released on September 29.

Researchers analysed 1,326 publicly available AI-generated images and videos posted by 297 public accounts in Hindi and English across X (as Twitter is now called), Facebook and Instagram from May 2023 to May 2025.

“As ChatGPT seeks to access the potentially vast Indian market by offering an inexpensive subscription plan priced at less than $5 a month, the need for such analysis becomes especially paramount,” said the report. “This report offers an early and urgent intervention into the ways AI tools such as Midjourney, Stable Diffusion, and DALL·E are being used to generate synthetic images that fuel hate and disinformation against India’s Muslims.”

Vivid, realistic, and at times animated, some of these images were used by pro-Hindutva media organisations as YouTube thumbnails or to accompany articles, said the report.

Across platforms, such content drew over 27.3 million engagements – in the form of comments, likes and shares – over the course of two years, about 91% of them on X alone. This indicates how emerging technologies like generative AI are being weaponised to “dehumanize, sexualize, criminalize or incite violence against Muslims”, said the report.

It added, “This is not a departure from past practices of photoshopping or crude meme-making. Rather, it represents the acceleration of such practices, through the production [of] content with greater credibility and precision and at scale.”

Symbolic violence against women

Based on their analysis, researchers grouped the images into four themes: conspiratorial Islamophobic narratives, exclusionary and dehumanising rhetoric, the sexualisation of Muslim women and aestheticisation of violent imagery.

Images with “gendered Islamophobia” drew the highest engagement, said Raqib Hameed Naik, executive director of the centre. The report notes that such images had 6.7 million engagements, the most of the four categories analysed. “Muslim women face a distinct and gendered threat, as AI imagery renders them hypervisible while normalizing their sexual subjugation,” it says.

Many images depicted Muslim women, often wearing headscarves with revealing clothing, as servile or dominated by hypermasculine Hindu men, said researchers. Describing these images as “symbolic acts of violence”, the report notes that Muslim women are “cast as spoils of conquest in a narrative of domination… embedding misogyny within a broader Islamophobic project”.

Another disturbing trend was the use of artistic styles to depict incidents of violence or for mockery and humour.

“Ghibli-style” images, inspired by Japanese director Hayao Miyazaki’s signature animated movies, were generated to depict historical incidents like the demolition of the Babri Masjid, or incidents of bias such as a police officer kicking Muslims during prayers on the streets of Delhi in 2024.

The “aesthetic familiarity” of Ghibli-style images also makes them effective, leading to their wider circulation. “Rendered through a soft, animated lens, the brutality is visually sanitized, turning an act of violence into something deceptively palatable,” says the report.

The visual propaganda deepens the climate of fear, humiliation, and social exclusion that is becoming an entrenched feature of Indian society, said the report. “In terms of the implications for Indian society, such hateful content accelerates the dehumanization of a religious minority, corrodes constitutional protections for minorities, and weakens the foundations of Indian democratic institutions.”

Global problem

The weaponisation of generative AI is a global phenomenon.

The centre’s report noted that in June, a British defence and security thinktank, the Royal United Services Institute, said generative AI had become a force multiplier for online hate, allowing extremist actors to produce synthetic visuals that align neatly with existing prejudices.

In New York City, the Center for the Study of Organized Hate found that since the mayoral primaries began, multiple social media accounts had used generative AI to create “scenarios” of what would happen if Zohran Mamdani wins in November. Mamdani, who won the Democratic primary in June, is the first Muslim to run for the post and has faced Islamophobic attacks online.

For instance, a three-minute video circulated on X, set to Frank Sinatra’s “New York, New York”, features familiar AI-generated tropes: Muslim men armed with AK-47s standing in parks and walking police officers like dogs through the streets, an Apple store being vandalised and looted, and Israeli Prime Minister Benjamin Netanyahu being arrested.

The video ends with Mamdani bringing down the Statue of Liberty with a rope around its neck, and fireworks over the city that read “Commietown”, reflecting paranoia about Mamdani’s “leftist” policies for the city.

As of October 17, the video had clocked over 14,000 views but had not been flagged or given any warning labels.

The centre’s executive director Naik said that, with constant updates to generative software, the danger is that things will reach a point “where it will be extremely, extremely hard for people – including experts – to recognise what is true or false”. This report is an early warning, he said.

Edward Ahmed Mitchell, deputy executive director of the Council on American-Islamic Relations, said the problem was not the use of such technology for accurate news or reports. “But it’s when you’re deceiving and inciting people by presenting these graphics as facts – that’s the danger,” he said.

On October 15, the Council on American-Islamic Relations urged social media companies to rein in the use of generative AI to propagate hateful rhetoric and violence, especially against Muslims.

Naik and Mitchell said tech companies and AI software owners must intervene and reevaluate how communal and hateful rhetoric is being created and amplified.

“Of course, no one is asking them to censor free speech,” said Mitchell, a former criminal prosecutor. “But what they need to do immediately is tackle incitement to violence”.
